5 Dos and Don’ts of A/B Email Testing

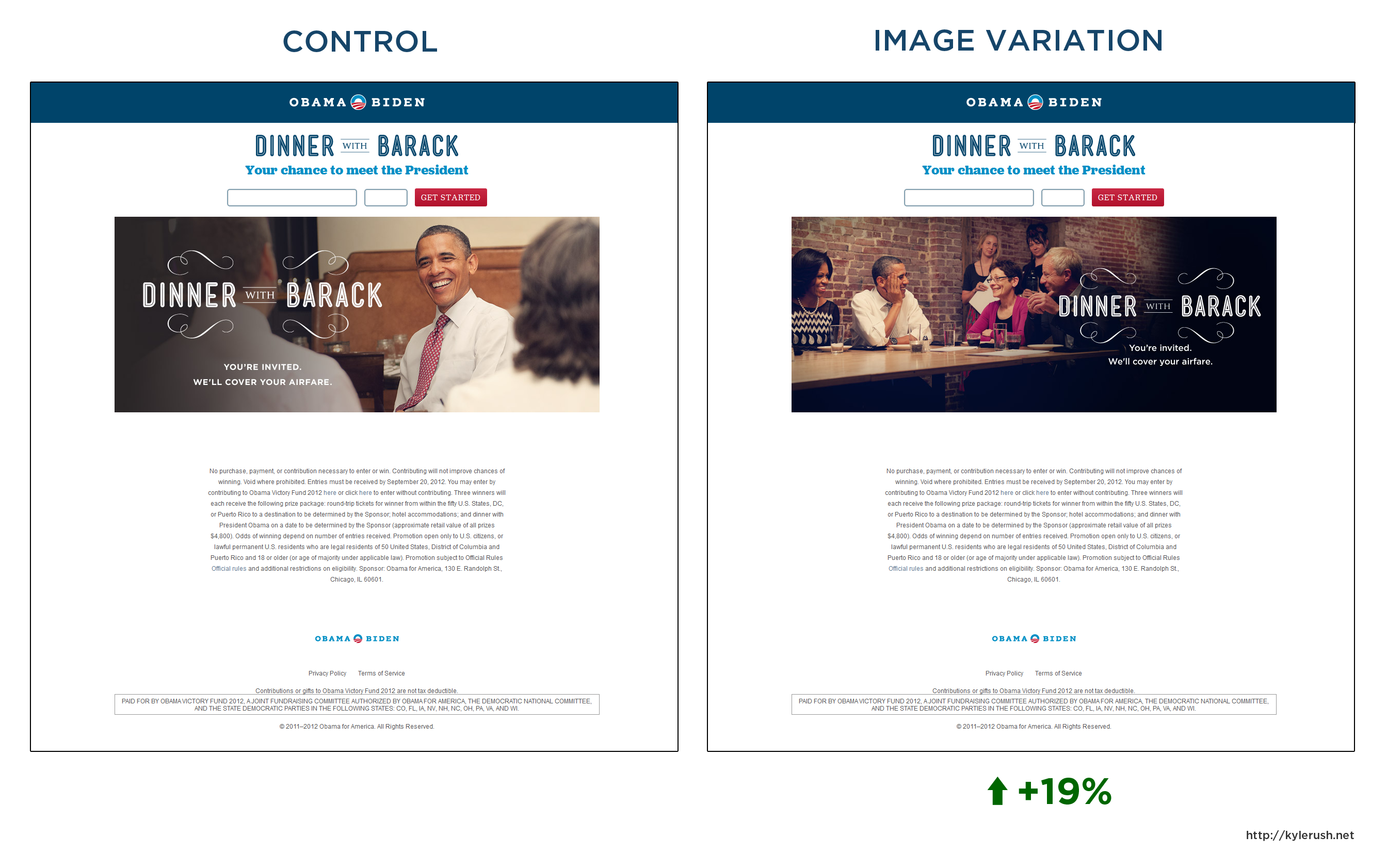

A/B testing can improve almost every area of ecommerce, from web design to landing page copy. In fact, Fast Company recently explored how one of today’s fastest-growing online retailers, Adore Me, A/B tests photos of its models to drive sales. A multi-million dollar online lingerie company, Adore Me proves that science can be sexy.

Because email marketing is still one of the most essential ecommerce strategies, testing your emails can be a profitable game-changer. There is no better way to find out the impact that a design, copy, or external change can have on the success of your emails. But if you’re unfamiliar with A/B testing, it can be an overwhelming project to start. Here’s our starter guide (or a quick refresher for old pros):

The 5 dos:

1. Run tests that have recurring benefits.

If you’ve been doing some online research, you know there are a myriad of factors you can test for in your emails. And although some of them are intriguing (what would happen if you alternated capitals and lowercase in the headline?), some of the tests are unnecessary. Don’t build your testing schedule from the lists in how-to-A/B-test articles. You can, however, use them for inspiration to find factors that are relevant to your specific audience.

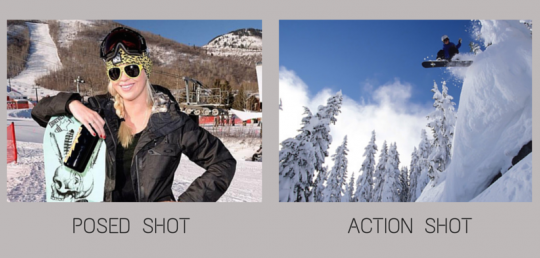

For example, if you’re testing images for a sports apparel company, choose factors like product images on backgrounds, using models in your images, or showing posed shots versus action shots. Try to choose global factors that will apply to all of your future emails before worrying about smaller considerations that won’t appear too often in your campaigns.

2. Test like a scientist.

Do you remember your middle school science fair projects? Ms. Morgan told the class that you needed a control factor, a variable factor, and a hypothesis in order to make a test credible. Well, class is back in session. Make sure you isolate all other factors as much as possible when creating your A and B tests. Preferably, your A test is the control, and is representative of your current email. The B test is almost identical, but altered by one factor.

So if you’re testing subject line length, here is a bad test:

A: Get a move on, pal!

B: T-SHIRTS FROM $4.99 ON SALE NOW DON’T MISS OUT

These two differ on length, punctuation, capitalization, and content. A better test would be:

A: Pick up T-shirts from $4.99

B: Pick up T-shirts from $4.99 in dozens of styles & colors

Test one thing at a time and keep your sets random. When testing the use of a name in your subject line, don’t direct one of the tests at everyone who has a first name in your email database. That isn’t isolating the variable. When in doubt, ask yourself: what would Ms. Morgan do?
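To make “keep your sets random” concrete, here is a minimal sketch of a random split. The `split_ab` helper and the example addresses are hypothetical, and most email platforms will do this step for you. The point is that both groups end up with the same mix of subscribers, including those with and without a first name on file.

```python
import random

def split_ab(subscribers, seed=None):
    """Randomly split subscribers into two groups of (nearly) equal size."""
    shuffled = list(subscribers)           # copy so the original list is untouched
    random.Random(seed).shuffle(shuffled)  # seeded shuffle makes the split repeatable
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]

# Hypothetical subscriber list, for illustration only
subscribers = [f"user{i}@example.com" for i in range(10)]
group_a, group_b = split_ab(subscribers, seed=42)
```

Group A then gets the control subject line and group B gets the variation, so the only systematic difference between the two groups is the factor you are testing.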

3. Read data as observations.

Data can get confusing if you leave it in the numbers. Test B saw a 5% lower open rate but an 8% higher click-through rate. Yay? Use plain English statements to communicate what happened.

If you are looking at opens:

For everyone who received the email, did this test improve their open rate?

If you are testing for an internal element:

For everyone who opened the email, did this test improve the click through rate? For everyone who opened the email, did the test drive more orders? For everyone who clicked through the email, did this test change their likelihood of ordering?

Breaking down the results of your data clears up what has actually changed and what information is unrelated to your A/B test.
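As a rough illustration, the questions above can be turned into a small script that reports each rate against the right audience. The `funnel_rates` helper and all of the counts are made up for this sketch; swap in the numbers from your own campaign report.

```python
def funnel_rates(sent, opens, clicks, orders):
    """Express each result as a rate of the audience it actually applies to."""
    return {
        "open rate (of everyone who received the email)": opens / sent,
        "click-through rate (of everyone who opened)": clicks / opens,
        "order rate (of everyone who opened)": orders / opens,
        "order rate (of everyone who clicked through)": orders / clicks,
    }

# Hypothetical campaign counts, for illustration only
test_a = funnel_rates(sent=50_000, opens=10_000, clicks=1_000, orders=100)
test_b = funnel_rates(sent=50_000, opens=9_500, clicks=1_026, orders=110)

for question in test_a:
    change = test_b[question] / test_a[question] - 1  # relative change, B vs. A
    direction = "higher" if change > 0 else "lower"
    print(f"Test B's {question} was {abs(change):.0%} {direction} than Test A's.")
```

Each printed line answers one of the plain English questions, which makes it easier to see which stage of the email your change actually affected.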

4. Give yourself a reality check.

Don’t run a test you think will be statistically insignificant as it wastes time and resources. Or, if you’re not sure, save those tests for a rainy day email when you don’t have anything to test. So, what makes a test statistically significant?

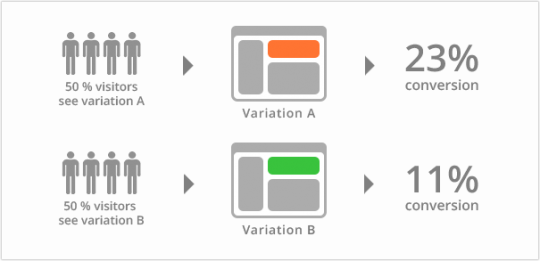

It means enough people have changed their behavior that you can be 95% sure the difference in results is not random. For example, if you have 100,000 subscribers and get around 200 orders from an email, how many new orders would make the results significant?

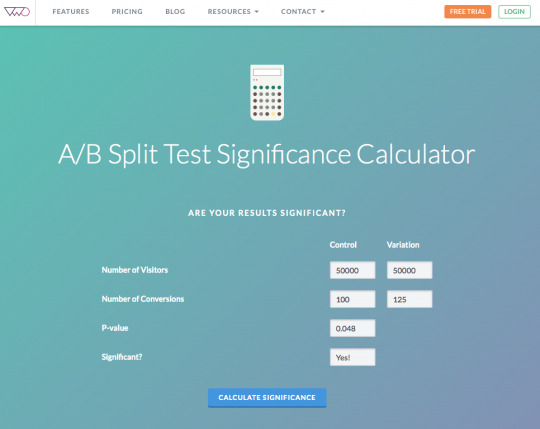

A quick way to find out if you’ve got something big on your hands is to run the numbers through this app on VWO. Here, your control should be your A test (50,000 visitors and 100 orders), and the variation should be your B test (50,000 visitors and, let’s say, 125 orders). Leave the math up to the machine, and if the result is “Yes!”, you’ve got yourself a factor that has proven to be impactful.
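If you’re curious what a calculator like this is doing, here is a minimal sketch using a standard two-proportion z-test on the example numbers (50,000 sends and 100 orders for A, 50,000 sends and 125 orders for B). This is a common textbook approach, not necessarily the exact calculation the VWO tool performs, and the function name is our own.

```python
from math import erf, sqrt

def two_proportion_z_test(orders_a, sent_a, orders_b, sent_b):
    """Return (z, one-sided p-value, two-sided p-value) for B versus A."""
    rate_a = orders_a / sent_a
    rate_b = orders_b / sent_b
    # Pooled rate: assumes A and B share one true rate if nothing changed
    pooled = (orders_a + orders_b) / (sent_a + sent_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    z = (rate_b - rate_a) / std_err
    p_one_sided = 0.5 * (1 - erf(z / sqrt(2)))  # chance of a z this high by luck
    p_two_sided = 1 - erf(abs(z) / sqrt(2))     # chance of a gap this big either way
    return z, p_one_sided, p_two_sided

z, p_one, p_two = two_proportion_z_test(100, 50_000, 125, 50_000)
print(f"z = {z:.2f}, one-sided p = {p_one:.3f}, two-sided p = {p_two:.3f}")
```

A p-value below 0.05 corresponds to the 95% bar. With these numbers the one-sided test (“is B better than A?”) just clears it, while the stricter two-sided test (“is B different from A?”) does not, so it’s worth knowing which version your calculator uses.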

5. Act on your results.

Once you’ve gotten your data back from the test and have translated it into actionable observations, act on it! If the size of your CTA has increased CTR by a significant amount, change your CTA size. The tests that are significant can often lead to other tests that are significant. Next, try testing the color, copy, or placement of your CTA and see what happens. On the other hand, if moving your product images around isn’t affecting your results, stop testing it. Once you’ve sniffed out a good direction of tests, follow the scent. But remember, one test at a time!

And here are 5 don’ts:

1. Don’t use before and after tests.

Testing one week against another may seem like an ok test as long as all other factors stay the same… but it’s innately flawed. One week could be the very reason it does better or worse, skewing the results of your test. For an A/B test to be truly valid, you must send the emails at the same time on the exact same day, excluding tests where the factor is delivery time.

2. Don’t over test for your sample size.

Know the limits of your email list. If you have 25k subscribers, you won’t be able to run 4 variations in a single week. Breaking it apart that much would skew your data and prevent you from seeing real results. There’s no hard and fast rule for number of subscribers vs. number of variations, but as you break apart your sample size, the results become less significant for each test. Again, use the VWO calculator to see what kind of results you need for each variation.
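To see why smaller groups hurt, here is a rough sketch that estimates how big a lift in orders a variation would need before it clears a one-sided 95% z-test against the control. It assumes an evenly split list and borrows the 0.2% order rate from the earlier example; the helper names are hypothetical, and the sketch ignores statistical power and the extra risk of false positives when several variations are compared at once.

```python
from math import sqrt

Z_CRITICAL = 1.645  # one-sided 95% threshold for a standard normal

def z_score(orders_a, sent_a, orders_b, sent_b):
    """Two-proportion z-score for B versus A, using a pooled order rate."""
    pooled = (orders_a + orders_b) / (sent_a + sent_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    return (orders_b / sent_b - orders_a / sent_a) / std_err

def min_relative_lift(list_size, variations, baseline_rate):
    """Smallest relative lift in orders that reaches Z_CRITICAL for one variation."""
    group_size = list_size // variations
    control_orders = group_size * baseline_rate  # expected orders in the control
    variant_orders = int(control_orders) + 1
    while z_score(control_orders, group_size, variant_orders, group_size) < Z_CRITICAL:
        variant_orders += 1
    return variant_orders / control_orders - 1

for variations in (2, 4):
    lift = min_relative_lift(25_000, variations, baseline_rate=0.002)
    print(f"{variations} variations: a variation needs roughly a {lift:.0%} lift in orders")
```

The required lift climbs as the list is cut into more pieces, which is the practical meaning of “less significant for each test.”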

3. Don’t test factors that are one-email specific.

Sometimes your one-off emails have an exciting topic or provide the opportunity for new A/B tests. But if you only send a Happy New Year email once a year, and you’re not advertising products in it, don’t bother testing the size of your snowflake graphic. Testing changes that have recurring applications means they’ll have a bigger impact on your business. Focus on tests that may be less exciting for the moment, but much more impactful in the long run.

4. Don’t assume results are permanent.

The very reason for A/B testing is the unpredictable and constantly changing ecommerce landscape. With that in mind, don’t ever set an A/B test result in stone. Not only do you need to test a factor multiple times, as environmental factors and chance could create varied results, but you’ll also need to question these results every several months to a couple of years, depending on the nature of the testing factor.

CTA variable findings could last for years, while “trendy” changes in subject lines (like personalization) could feel old to recipients in a matter of months. Has your audience grown sick of emojis? Do you want your brand to appeal to a new, younger segment with punchier copy? If everyone is doing it, it’s not cool anymore. And remember, you’re working with a 95% confidence level, not 100%. Better safe than sorry.

5. Don’t call it too early.

It’s great to be so excited for the results to pour in that you’re refreshing Mail Chimp (or Mail Kimp for you Serial listeners) every 30 seconds. But remember that the first two hours of campaign responses are not representative of the whole sample. Early responders may react differently than those who take a while to find and open your email. A good standard is to wait at least 24 hours before examining your data. (But the cool kids give it 48 hours.)

There’s a lot of wiggle room when it comes to A/B testing. If you mess up one test, there is plenty of time to try again. Find your footing through research and experience, and confidence will come with time. As a wise man (it was either Da Vinci or our marketing director, Dave) once said, “A/B testing is a science; learning what to A/B test is an art.”
